OpenAI is a company focused on creating AI (artificial intelligence) tools and software that help humans solve complex problems. It collaborates with other organizations, sharing its research for the betterment and safety of humanity. The company's research and patents are intended to remain open to the public, except in cases where releasing them could negatively affect safety. OpenAI was founded in 2015 by Elon Musk, Sam Altman, and other founders and investors, and is headquartered in San Francisco, California.

The mission they work toward, in their own words, is:

OpenAI’s mission is to ensure that artificial general intelligence (AGI)—by which we mean highly autonomous systems that outperform humans at most economically valuable work—benefits all of humanity.

The core purpose of OpenAI is to work on artificial general intelligence (AGI), also known as strong AI. The goal of AGI is to build computer systems that can solve any problem a human faces, and solve it more accurately than humans can.

To learn more about AGI, including its capabilities and how it compares with AI in general and with weak AI, read Ben Lutkevich's article on TechTarget.

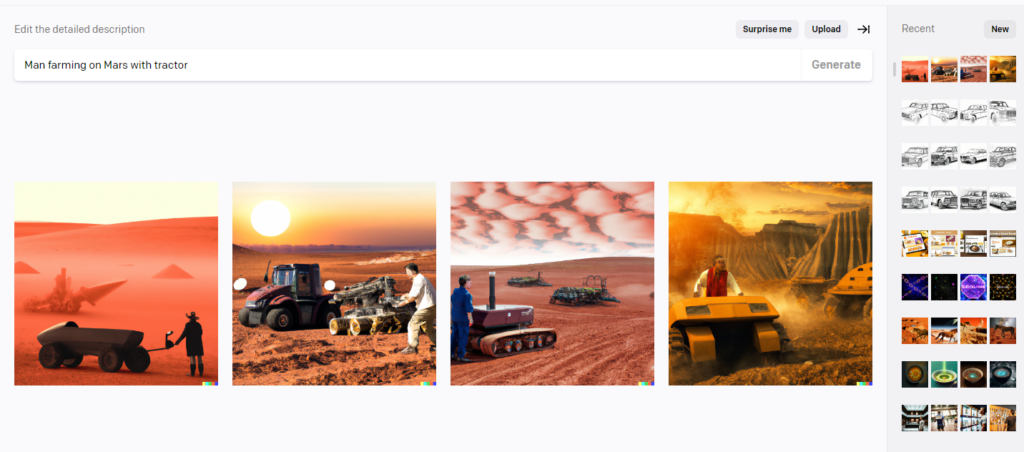

OpenAI has already launched many different projects, and each launch surprises the world and creates a buzz. The most recent one, and my reason for writing this post, is DALL-E. DALL-E is an AI tool that creates or edits images from natural-language instructions. Its upgraded version, DALL-E 2, has just launched, and I happened to get early access to it.

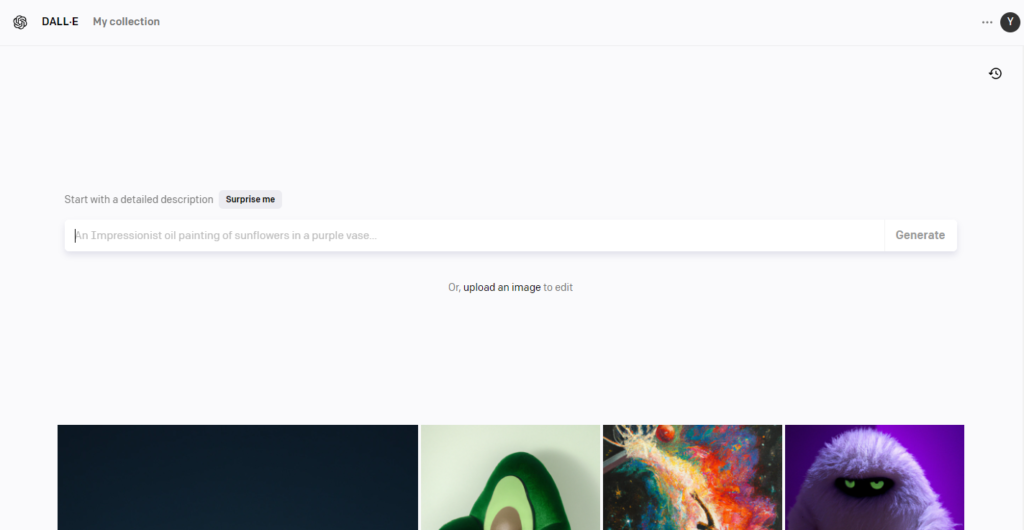

DALL-E 2 was an invite-only tool, and I had been on the waitlist ever since I first heard about it and registered. The site's UI is as simple as it can be: just an input box and a Generate button, and the magic happens.

The jump from the original DALL-E to DALL-E 2 is on a different level. The progress has been great: images that blend real objects with the imaginary things we type are generated in seconds, and this is done using a technique called “diffusion”.

In the OpenAI team's own words:

DALL·E 2 has learned the relationship between images and the text used to describe them. It uses a process called “diffusion,” which starts with a pattern of random dots and gradually alters that pattern towards an image when it recognizes specific aspects of that image.
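To make the quoted description a little more concrete, here is a toy sketch of a reverse "diffusion" loop in Python. It is not OpenAI's code: it follows the generic DDPM-style sampling update, treats an "image" as a short list of numbers, and uses a hypothetical `toy_reverse_diffusion` helper with an assumed linear noise schedule (`beta_start`, `beta_end`). The biggest simplification is the noise predictor: here it is an "oracle" that already knows the target image, whereas in DALL-E 2 a trained neural network, steered by the prompt, plays that role.

```python
import math
import random


def linear_beta_schedule(num_steps, beta_start=1e-4, beta_end=0.02):
    """Noise variance per step (an assumed, commonly used linear schedule).

    Requires num_steps >= 2.
    """
    step = (beta_end - beta_start) / (num_steps - 1)
    return [beta_start + i * step for i in range(num_steps)]


def toy_reverse_diffusion(target, num_steps=1000, seed=0):
    """Start from random noise and denoise it step by step toward `target`.

    `target` stands in for an image as a flat list of pixel values.
    The noise estimate below is an oracle that knows `target`; a real
    system would use a trained network to predict the noise instead.
    Returns the final pixels and the distance to `target` after each step.
    """
    rng = random.Random(seed)
    betas = linear_beta_schedule(num_steps)
    alphas = [1.0 - b for b in betas]
    alpha_bars = []
    running = 1.0
    for a in alphas:
        running *= a
        alpha_bars.append(running)

    # Start from a "pattern of random dots": pure Gaussian noise.
    x = [rng.gauss(0.0, 1.0) for _ in target]
    history = []

    for t in reversed(range(num_steps)):
        beta, alpha, alpha_bar = betas[t], alphas[t], alpha_bars[t]
        new_x = []
        for xi, x0 in zip(x, target):
            # Oracle estimate of the noise mixed into this pixel.
            eps = (xi - math.sqrt(alpha_bar) * x0) / math.sqrt(1.0 - alpha_bar)
            # Remove a little of that noise (DDPM posterior mean).
            mean = (xi - beta / math.sqrt(1.0 - alpha_bar) * eps) / math.sqrt(alpha)
            # Add fresh noise on every step except the last one.
            noise = rng.gauss(0.0, 1.0) if t > 0 else 0.0
            new_x.append(mean + math.sqrt(beta) * noise)
        x = new_x
        # Track how far the current pattern is from the target image.
        dist = math.sqrt(sum((xi - x0) ** 2 for xi, x0 in zip(x, target)))
        history.append(dist)

    return x, history


if __name__ == "__main__":
    # A tiny four-"pixel" target image.
    target = [0.0, 0.5, 1.0, -1.0]
    image, history = toy_reverse_diffusion(target)
    # The distance to the target generally shrinks as noise is removed.
    for step in (0, 250, 500, 750, len(history) - 1):
        print(f"after {step + 1} steps: distance {history[step]:.4f}")
    print("final pixels:", [round(p, 3) for p in image])
```

The point of the sketch is the shape of the loop: many small steps, each removing a bit of estimated noise and re-adding a smaller amount of fresh noise, so the random pattern drifts gradually toward a coherent image rather than appearing in one jump.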

The Buzz around DALL-E 2 and My Opinion

Much of the noise around this tool is the claim that, in time, it will take graphic designers' jobs away, since it produces results in seconds from whatever we type. The AI does the job well, but the outcomes are not always satisfying, and there is always a level of correction we need to apply as humans. We can treat the generated images as mock-ups (high-level designs), but we cannot consider them final designs ready for business or production use.

The model is still in beta and still being trained; it needs to learn from huge datasets of images and text to become more proficient at understanding natural language. It is too early to speculate about AI taking anyone's job away.

Keep learning new skills; as Steve Jobs said, it's always about connecting the dots. He took calligraphy classes, and that experience later led him to introduce us to different kinds of computer fonts. We use those fonts to enhance our projects and websites, and similarly, we will use AI to enhance our work.

If you are eager to read more about the technical side of DALL-E, please go through these links:

Aditya Ramesh (creator of DALL-E): http://adityaramesh.com/posts/dalle2/dalle2.html

Sam Altman's blog (co-founder of OpenAI): https://blog.samaltman.com/